Bond - A Spy-Based Testing and Mocking Library

Testing plays a key role in the development of any software package, especially for large or complex systems with many contributors. Unit tests let you perform sanity checks, verify the expected behavior of your program, detect errors quickly, and change one area of a program with confidence that you didn't break another; the benefits are enormous. But writing tests can be difficult and tedious: you have to create the proper test environment, exercise the relevant components, and then validate that they ran correctly. The typical pattern of unit testing involves calling some function or module, obtaining results, and then using a series of assertEquals-style comparisons to check that the results are as expected. For simple functions a single assertion may be sufficient, but the situation is often more complex, requiring numerous assertions to validate thoroughly and gain confidence that the behavior is correct. Even worse, if any of the expected behavior changes, you have to adjust each of the affected assertions individually, so there is a tradeoff between the thoroughness of test validation and the ease of maintaining the tests as behavior changes.

Graph showing hypothetical developer effort for a set of complex unit tests as a project progresses. The tests are initially developed in January; Bond requires slightly less effort due to a reduction in the number of assertions that need to be created manually. In April, a significant change to the behavior of the system under test is made, causing a large amount of developer effort to update the traditional unit tests. Because differences between old and new test behavior are easy to reconcile, updating the tests with Bond requires very little developer effort. In August, a more minor change is made to the tests, and Bond again requires less developer effort to update. Over the course of a few behavioral changes, the benefits of Bond become much more apparent than the initial savings. Note that this is a hypothetical situation intended only to demonstrate where Bond can be useful, but we plan to perform a more detailed study of this phenomenon using a real project in the near future.

We are introducing a new testing library, Bond, which aims to make this process more efficient and developer-friendly by transitioning away from traditional assertions and instead using what we refer to as spy-based testing. Rather than explicitly specifying the expected value, you simply "spy" the object(s) you wish to make assertions about - similar to using a printf to see what a value is, but done in a more careful way. The first time this happens, Bond will display the spied objects to you in a serialized form called an "observation" and ask if the output is what you expect; if it is, Bond will save this value as a reference. For future test executions, spy output will be compared against this reference value to ensure that the behavior hasn't changed. This provides the same assertion power as a unit test, but without having to type out large blocks of assertions. The real power comes into play when the expected behavior changes; rather than manually changing test values, you are presented with an interactive merge tool which displays the differences between expected and current output. You can view the changes, see if they match the new expected behavior, and easily accept some or all of them as the new reference.
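The record-and-compare cycle described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Bond's implementation: the `ToySpy` class, its method names, and the in-memory reference are all hypothetical, and a plain unified diff stands in for the interactive merge tool.

```python
import difflib
import json


class ToySpy:
    """Toy record-and-compare spy (hypothetical; not Bond's actual API)."""

    def __init__(self, reference=None):
        # Serialized observations accepted on an earlier run, or None on a first run.
        self.reference = reference
        self.observations = []

    def spy(self, spy_point_name, **values):
        # Record what was seen instead of asserting on it.
        self.observations.append({"__spy_point__": spy_point_name, **values})

    def serialize(self):
        # Sorted keys keep the output stable so that diffs are meaningful.
        return json.dumps(self.observations, indent=4, sort_keys=True)

    def diff_against_reference(self):
        # Return unified-diff lines; an empty list means nothing changed.
        old = (self.reference or "").splitlines()
        new = self.serialize().splitlines()
        return list(difflib.unified_diff(old, new, "reference", "current", lineterm=""))


# First run: no reference yet, so every observation shows up as an addition.
first = ToySpy()
first.spy("create_location_dictionary", output={"lat": 27.65, "lon": 90.45})
first_diff = first.diff_against_reference()
accepted = first.serialize()  # the developer reviews and accepts this output

# Later run with the same behavior: no differences.
same = ToySpy(reference=accepted)
same.spy("create_location_dictionary", output={"lat": 27.65, "lon": 90.45})
unchanged_diff = same.diff_against_reference()

# Later run after a behavior change: the diff pinpoints the changed value.
changed = ToySpy(reference=accepted)
changed.spy("create_location_dictionary", output={"lat": 27.65, "lon": 91.0})
changed_diff = changed.diff_against_reference()
```

Printing `"\n".join(changed_diff)` shows only the line whose value moved, which is the property that makes accepting or rejecting a behavior change quick.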

In addition to using spying as a way to replace assertions, you can also spy intermediate values and outside interactions within production code: writes to a database, HTTP requests, command line tool executions, etc. If you place calls to spy within your code, then any time you run a test with Bond, these calls will appear in the observation log, allowing you to trace execution and easily monitor how your code is interacting with outside services. This has been used in the past to monitor complex interactions which result in hundreds of observations - too many to make individual assertions about, but still few enough to verify by reading through the list of observations and checking that the system is behaving as expected. In subsequent test runs, you can detect changes to any of those numerous observations, as opposed to only the few assertions that would probably be written in a traditional unit test.

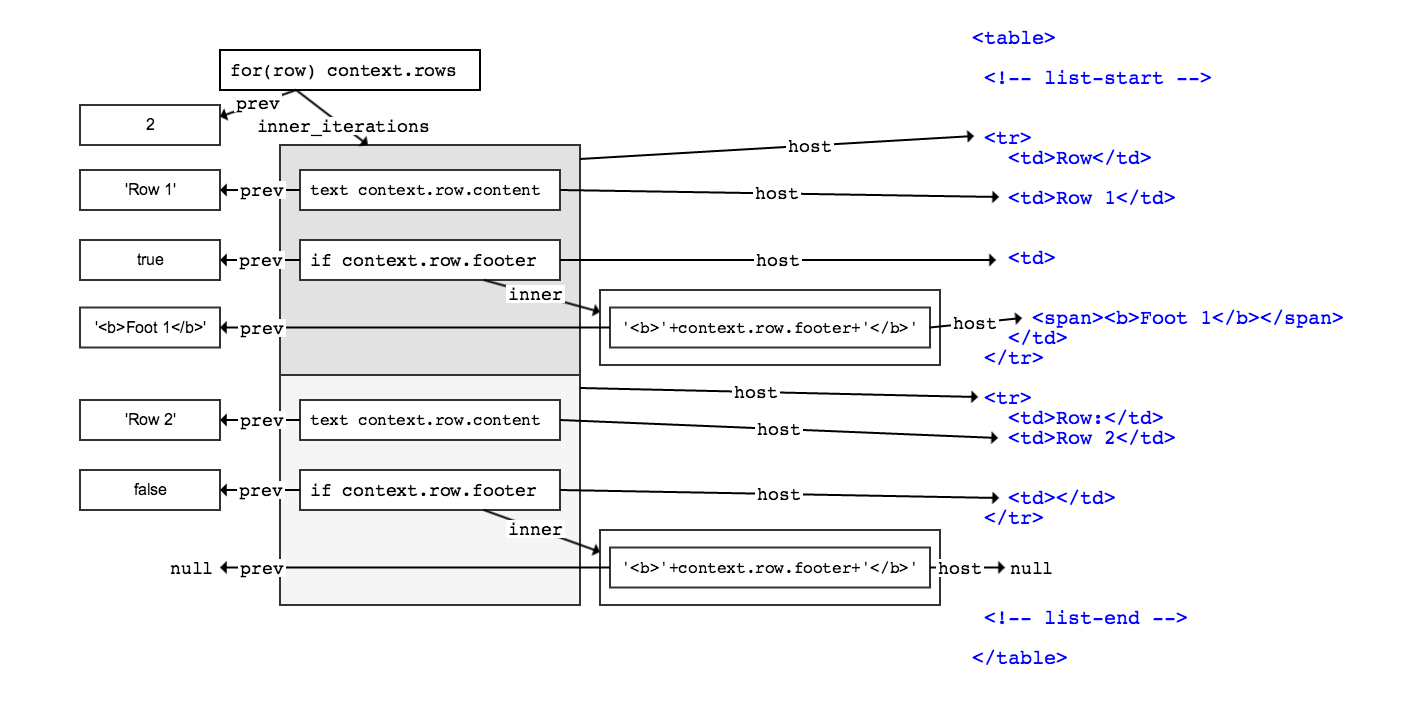

Finally, Bond has a fully integrated mocking library, allowing you to simultaneously mock out and spy function calls at arbitrary points within your program. If a function is marked as a "spy point", any call to that function will be spied, recording its call arguments and (optionally) its return value. If you then deploy an "agent" to monitor that spy point, the agent can take various actions when the function is called: mock out a return value, execute arbitrary auxiliary functions, allow the function call to continue as normal after spying its call arguments, etc. You can also mark such spy points as requiring mocking, which can be useful on functions which are dangerous to execute during testing, and execution will automatically halt if the function is ever called from a test without having an agent prepared to perform mocking.
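To make the spy point and agent idea concrete, here is a minimal self-contained sketch. Everything in it is hypothetical and chosen for illustration (`toy_spy_point`, `toy_deploy_agent`, the `require_agent` flag, `MockRequiredError`, and the two example functions); it is not Bond's API, and it covers only a fixed mock result rather than the full range of agent actions.

```python
import functools

_agents = {}        # spy point name -> mock result supplied by a test
observations = []   # ordered log of spied calls


class MockRequiredError(RuntimeError):
    """Raised when a spy point that requires mocking has no agent deployed."""


def toy_deploy_agent(spy_point_name, result):
    # Toy agent: always answers calls to the spy point with a fixed result.
    _agents[spy_point_name] = result


def toy_spy_point(spy_point_name, require_agent=False):
    # Toy decorator: record every call, then mock it or let it run normally.
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            observations.append(
                {"__spy_point__": spy_point_name, "args": args, "kwargs": kwargs}
            )
            if spy_point_name in _agents:
                return _agents[spy_point_name]  # mocked: the real function never runs
            if require_agent:
                raise MockRequiredError(spy_point_name)  # refuse to run unmocked
            return func(*args, **kwargs)  # spied, but otherwise unchanged
        return wrapper
    return decorator


@toy_spy_point("gps.lookup", require_agent=True)
def lookup_location(user_id):
    # Stand-in for a call that must never reach the real service during tests.
    raise AssertionError("real GPS service contacted")


@toy_spy_point("format_location")
def format_location(lat, lon):
    return f"{lat:.2f},{lon:.2f}"
```

Calling `lookup_location` before an agent is deployed raises `MockRequiredError`; after `toy_deploy_agent("gps.lookup", {...})` it returns the prepared value, and in both cases the call is appended to `observations`.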

Bond was originally developed by George Necula for use in projects at Conviva and has been used there for a number of years. Recently, we rewrote the project from the ground up, taking into account many lessons learned from the first version, and Bond is now available for free on GitHub in Python, Ruby, and Java.

Usage and Examples

We show only some simple examples of how Bond is used in Python; for more detailed examples in all three languages, as well as tutorials which walk you through how to add Bond to a project, we encourage you to look at the Bond documentation. You will also find some more detailed explanations of the inner workings of Bond.

Basic Unit Test Spying

Imagine that you are testing some function which constructs a data dictionary to be sent off as an HTTP request. Let's say the content is your current location and looks like this:

    {
        lat: float,
        lon: float,
        accuracy: float,
        user_id: string
    }

Using Bond, we can easily check that the output is correct like so:

    def test_location_dictionary(self):
        data = create_location_dictionary("some input data")
        bond.spy('create_location_dictionary', output=data)

When we first run the test, we will receive a message like:

    There were differences in observations for MyTest.test_location_dictionary:
    --- reference
    +++ current
    @@ -0,0 +1,11 @@
    +[
    +{
    +    "__spy_point__": "create_location_dictionary",
    +    "output": {
    +        "accuracy": 10.5000,
    +        "lat": 27.6500,
    +        "lon": 90.4500,
    +        "user_id": "user1"
    +    }
    +}
    +]
    There were differences in observations for MyTest.test_location_dictionary:
    Do you want to accept the changes (MyTest.test_location_dictionary)? ([k]diff3 | [y]es | [n]o):

With a single spy statement, we are able to completely view the state of the object, and determine whether or not it is correct. You can see that the changes are displayed as a diff (against nothing, in this case). This same type of message will highlight exactly what parts of the observations have changed if the output ever falls out of sync with the accepted reference value.
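For comparison, a traditional version of the same check might look like the sketch below. The `create_location_dictionary` stub and its hard-coded values are hypothetical stand-ins so that the example runs on its own; the values mirror the observation shown above.

```python
import unittest


def create_location_dictionary(data):
    # Hypothetical stand-in for the function under test; values are hard-coded.
    return {"lat": 27.65, "lon": 90.45, "accuracy": 10.5, "user_id": "user1"}


class LocationDictionaryTest(unittest.TestCase):
    def test_location_dictionary(self):
        data = create_location_dictionary("some input data")
        # One assertion per field; each must be edited by hand if behavior changes.
        self.assertEqual(set(data), {"lat", "lon", "accuracy", "user_id"})
        self.assertAlmostEqual(data["lat"], 27.65)
        self.assertAlmostEqual(data["lon"], 90.45)
        self.assertAlmostEqual(data["accuracy"], 10.5)
        self.assertEqual(data["user_id"], "user1")


# Run the test case programmatically so the result can be inspected.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(LocationDictionaryTest)
result = unittest.TestResult()
suite.run(result)
```

Even for a four-field dictionary this takes five assertions, and the count grows with every field added to the output, whereas the spy-based test stays at one statement.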

Spy Points and Mocking

Now let's imagine that create_location_dictionary actually makes a call to an external GPS service to retrieve your location data. We don't want this to happen during testing, so we instrument the function with a spy point:

    @bond.spy_point(spy_point_name='location_dictionary')
    def create_location_dictionary(data):
        # ...

Now in our test code, we deploy an agent, which will intercept calls to create_location_dictionary and instead return a prepared mock result:

    def test_func_which_uses_create_location_dictionary(self):
        mock_location = # ...
        bond.deploy_agent('location_dictionary', result=mock_location)
        output = func_which_uses_create_location_dictionary()
        # ...

Now, when the function being tested tries to call create_location_dictionary, the mock location will be returned. Additionally, calls to create_location_dictionary will appear in the observation log, so you can easily track when and how calls to the external GPS service are made.

Where We Go From Here

While Bond can be very useful as-is, we think there is much more to explore in this new style of testing and hope to continue to expand Bond. One area we are specifically interested in is entering into a "replay-record" mode where Bond can assist you in easily generating the response data for your mocks. This can be especially useful when mocking HTTP requests, whose responses may be large and complex. We envision the ability to slowly run through the execution of your code against a live service, denote that the response received is a correct mock response, and have this be automatically saved to be used as the mock response in future test executions. While some mocking libraries provide similar functionality (e.g. VCR, Betamax), they require you to copy-paste mock data, which may be a large block of text, into your test. We believe Bond is in a unique position to provide this functionality, as it already provides a clean separation of test data vs. test code, as well as easy ways to create and change test data.

Bond is still under active development and we would love to hear your feedback; please leave us a comment!